Part 2 — Expert, Practitioner, Novice: How Mental Modes Shape Learning and AI Collaboration

Why Reflective Human Practitioners and Experts Working with Super-Expert AI Will Thrive in the Age of AI

What happens when we try to learn, work, or collaborate with others who think differently?

Some people rely on instincts. Others slow down and ask questions. Some dive in like explorers.

In Part 1, we explored how our minds switch between two types of thinking:

System 1 — fast, pattern-based thinking

System 2 — slow, effortful thinking that builds new understanding

Now in Part 2, we introduce KK Aw’s framework of mental modes—Expert, Practitioner, and Novice—to explain how we learn, grow, and collaborate better in an AI-driven world.

🔍 Mental Modes: KK Aw's Framework for Productive Thinking

KK Aw, a systems thinker and innovator, proposed that all human thinking—especially in collaborative settings—operates within one of three distinct mental processing modes. The key to productive learning and collaboration lies not just in recognizing which mode we’re in, but also in knowing when and how to switch modes as the situation demands.

🧠 Three Core Mental Modes

Expert Mode / Production Mode (System 1 Thinking):

What it is: Fast, automatic, and intuitive. Relies heavily on prior experience and well-practiced patterns.

Strength: Extremely efficient for familiar, repetitive tasks.

Limitation: Tends to reject anything unfamiliar or confusing. No new knowledge is created in this mode.

Used by: Subject matter experts, supervisors, and seasoned professionals during routine operations.

Learning Mode / Novice Mode (System 2 Thinking):

What it is: Slow, deliberate, and open-minded. New information is held in a mental “cache” for further processing.

Strength: Encourages curiosity, experimentation, and the creation of new knowledge.

Limitation: Time-consuming and mentally demanding.

Used by: Children, students, and novices who are exploring unfamiliar territory without a preset map.

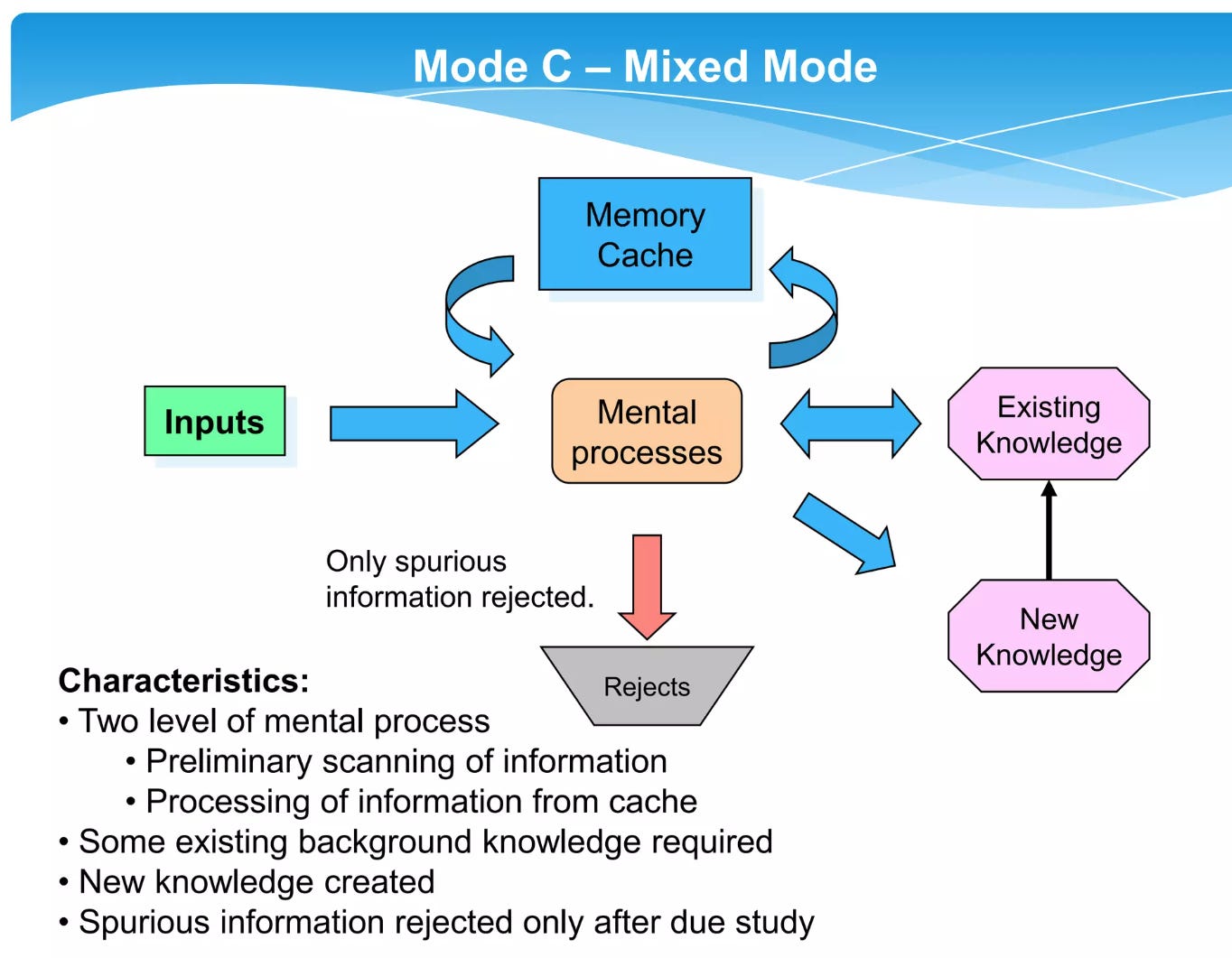

Practitioner Mode / Mixed Mode (System 1 + System 2 Thinking):

What it is: A balanced mode that uses quick scanning followed by deeper analysis. Combines the speed of prior knowledge with the openness of learning mode.

Strength: Allows for informed yet flexible decision-making.

Limitation: Requires a solid foundation of prior knowledge to function effectively.

Used by: Mature learners, reflective practitioners, and those engaging with semi-familiar or evolving domains.

What makes KK Aw’s insight powerful is this:

How well we learn and collaborate depends not only on which mode we are in, but also on how readily we switch modes to suit the topic and the people around us.

👥 Why Mode Switching Matters

Imagine a school project group with three students:

Alex knows the topic well and jumps straight into action (Expert mode).

Bree has done some research and asks clarifying questions (Practitioner mode).

Chris is new to the subject and wants to understand the basics (Novice mode).

If Alex insists on doing everything quickly, Bree and Chris feel left out. If Chris dominates the session with endless questions, the group slows down. But if they recognize their roles, they can adapt:

Alex slows down to explain (shifting toward Practitioner).

Bree bridges the gap and checks understanding.

Chris listens actively and gradually moves toward Practitioner.

This is mode-switching. And it’s what makes collaboration work.

Now imagine this not just with classmates, but across age groups, professions, and—yes—humans and AI.

🧰 AI: A Super-Expert, But Not Reflective

In today's world, AI has become a powerful Super-Expert:

Trained on massive, multimodal datasets

Able to recognize patterns across languages, logic, design, and data faster than any human

Always available, highly scalable, and generally consistent in quality, though its answers can vary from one attempt to the next because it generates text probabilistically.

But there’s a catch.

AI lacks the reflective self-awareness of a human mind. It doesn’t know what it knows. It doesn’t reflect, feel doubt, or recognize when it needs to shift modes. Even when we prompt it to simulate a dialogue between an "expert" and a "practitioner," the true value comes only when a human drives that interaction with purpose.

So the ideal pairing is:

AI Super-Expert + Reflective Human Practitioner or Expert

In the age of Super-Expert AI, even the most knowledgeable human expert becomes—by comparison—an advanced practitioner. Human experts may be deeply experienced in a particular domain, but no one can rival the scale and breadth of AI’s knowledge and pattern recognition.

That’s not a disadvantage—it’s an invitation to collaborate wisely.

When humans lean into their strengths—insight, empathy, context, reflection—they bring something to the partnership that AI alone cannot: the ability to adapt, question, and creatively recombine.

This pairing is the most fertile ground for insight and learning.

🏆 The Power of Reflective Human + AI

Let’s look at some mental mode pairings:

The lesson?

Students must learn how to become practitioners first.

Professionals must stay reflective even when working fast.

AI should be treated as a thinking partner—not a crutch or answer machine.

It can model, coach, and guide like a great tutor—but it should never replace your own effort to learn.

🧭 The Crucial Design Question

All of this leads us to one unavoidable question:

How do we design and regulate these systems to prioritize our long-term cognitive well-being over the fleeting allure of short-term convenience?

This is not just a philosophical problem—it’s a design problem, an educational problem, and a societal one.

The temptation is real: Let AI do the thinking. Let the interface be smooth. Let the friction disappear.

But the cost of frictionless convenience may be long-term mental atrophy.

That’s why we built Soda, a GPT-powered tutor designed from the ground up to resist becoming a shortcut. Soda engages learners through reflection, journaling, and practice aimed at mastery. It keeps students in System 2 thinking long enough to embed knowledge into System 1.

Because only then can they work with AI as true collaborators.

🎓 Why This Matters for Teen Learners

Many teens today are digital natives. They’re comfortable asking AI to write essays, summarize texts, or solve math problems.

But here’s the danger:

If you let AI do all the thinking, your brain won’t learn to think.

As we saw in Part 1, System 2 effort is required to embed knowledge into System 1. Without that effort, you never reach true mastery. You never become a practitioner. You stay a novice.

KK Aw's framework of mental modes shows why learning with AI can be amazing, but only if the human brings reflection to the table.

In Part 3, we take a closer look at Soda: a custom GPT tutor designed to help students become practitioners first, so they can one day collaborate with AI like true professionals.

📘 This article is Part 2 of the Thinking With AI series.

📝 Jin Lee, Founder of Peconaq Inc. • Co-written with ChatGPT